As the internet continues to grow, so does the need for content moderation. With so much user-generated content created every day, companies cannot realistically review all of it by hand. That’s where an image analysis API comes in.

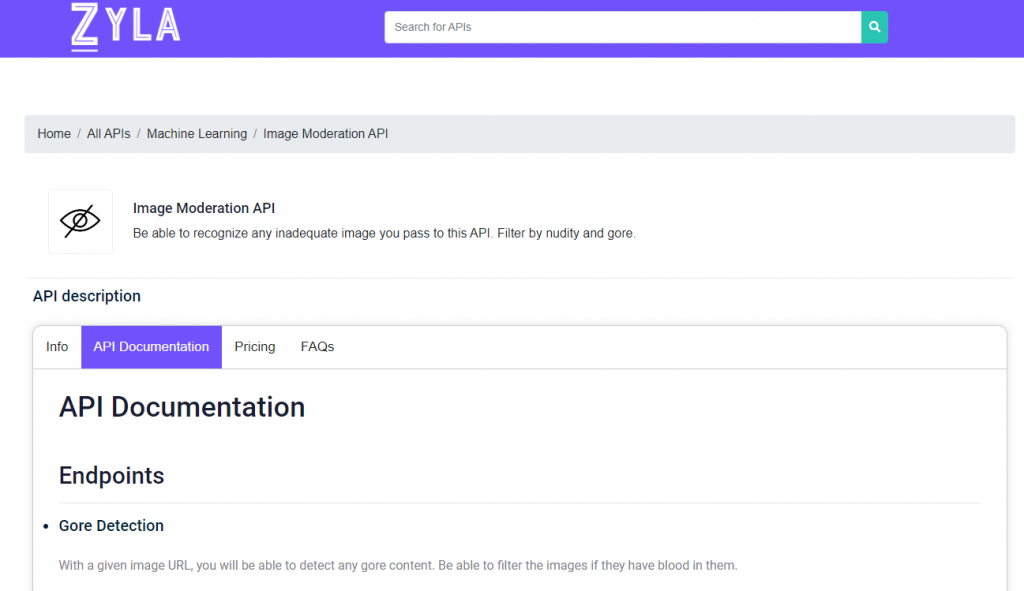

An image analysis API, such as the Image Moderation API, is a tool that uses machine learning to moderate content automatically. You can use it to identify and flag inappropriate images or videos and to detect potentially sensitive content. All of this happens in real time, which means content can be moderated as soon as it’s created.
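Flagging usually comes down to comparing per-category confidence scores against thresholds you choose. The sketch below shows that decision step. It assumes the service returns a score between 0.0 and 1.0 for each category; the category names, thresholds, and the `moderate` function are hypothetical and are not the Image Moderation API's actual response format.

```python
from dataclasses import dataclass

# Hypothetical categories and thresholds (assumptions, not a real API's schema).
DEFAULT_THRESHOLDS = {"nudity": 0.8, "violence": 0.7, "offensive": 0.75}


@dataclass
class ModerationDecision:
    flagged: bool
    reasons: list[str]


def moderate(scores: dict[str, float],
             thresholds: dict[str, float] = DEFAULT_THRESHOLDS) -> ModerationDecision:
    """Flag an image if any category score meets or exceeds its threshold.

    Categories missing from `scores` are treated as 0.0 (not detected).
    """
    reasons = [
        category
        for category, threshold in thresholds.items()
        if scores.get(category, 0.0) >= threshold
    ]
    return ModerationDecision(flagged=bool(reasons), reasons=sorted(reasons))


if __name__ == "__main__":
    # Example scores as a moderation service might return them for one image.
    print(moderate({"nudity": 0.05, "violence": 0.92}))
    # ModerationDecision(flagged=True, reasons=['violence'])
```

Keeping thresholds per category lets you be stricter about some kinds of content than others; a flagged image can then be hidden immediately or queued for human review.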

The Image Moderation API is an essential tool for any company that relies on user-generated content. It can help you keep your content clean and safe, and it can save you a lot of time and resources.

How can an API detect inappropriate content in an image?

It’s no secret that the internet can be a dark and dangerous place. With so many different types of content out there, it’s important to have a way to filter out the inappropriate material. That’s where an API comes in.

An API, or Application Programming Interface, is a set of rules and standards that lets one piece of software communicate with another, often software run by a different company. For content moderation, you can use an API to detect and flag images that contain pornographic or violent content. This is a valuable tool for companies that want to keep their websites safe for all users.

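There are a few different ways that an API can detect inappropriate content in an image. The simplest is keyword-based filtering: a list of keywords is matched against the text attached to an image, such as its caption or file name. If any of them appear, you can decide whether to keep the image or delete it. Because this approach never looks at the image itself, tools such as the Image Moderation API use machine learning to analyze the image content directly.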
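Keyword filtering only works on text, so in practice the keywords are matched against text that accompanies an image. Here is a minimal sketch, assuming the caption and file name are available; the blocklist and function names are hypothetical. It matches whole words so that, for example, "gorgeous" does not trigger the keyword "gore".

```python
import re

# Hypothetical blocklist; a real deployment would maintain its own.
BLOCKED_KEYWORDS = {"gore", "nsfw"}


def find_blocked_keywords(text: str, blocked: set[str] = BLOCKED_KEYWORDS) -> set[str]:
    """Return the blocked keywords that appear as whole words in `text`.

    Matching is case-insensitive. Any character outside [a-z0-9'] (spaces,
    "_", "-", ".") acts as a word separator, so file names are tokenized too.
    """
    words = set(re.findall(r"[a-z0-9']+", text.lower()))
    return words & {keyword.lower() for keyword in blocked}


def should_review(caption: str, filename: str) -> bool:
    """Send an image to review if its caption or file name hits the blocklist."""
    return bool(find_blocked_keywords(f"{caption} {filename}"))


if __name__ == "__main__":
    print(should_review("holiday snap", "nsfw_pic.jpg"))  # True
    print(should_review("Beach day", "IMG_001.jpg"))       # False
```

An image with an innocent caption and file name passes this check no matter what it shows, which is exactly the gap that image-based machine learning moderation is meant to close.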

Why is it important to keep your website as safe from abusive content as possible?

There are several reasons to keep your website as free of abusive content as possible. First, abusive content can harm the people who see it: it can damage their mental and emotional health and, in some cases, even lead to physical violence. Second, it can damage your website’s reputation. If people see that your website is full of abusive content, they will be less likely to visit or trust it. Finally, it can get your website shut down. Depending on where you operate, allowing abusive content on your website may break the law and expose you to serious legal consequences.

So why take the risk? It is important to keep your website safe from abusive content for the sake of your own reputation and the safety of your readers.

Try the Image Moderation API and stop worrying about unwanted content on your platforms!

If you’re looking for an easy way to ensure that users share only appropriate content on your platform, look no further than the Image Moderation API. This tool can automatically detect and flag images that contain nudity, violence, or other potentially offensive content. That way, you can rest assured that your platform is family-friendly and safe for all users.

The Image Moderation API is easy to use and affordable. So why not give it a try? You’ll be glad you did.

If you found this post interesting and want to know more, continue reading at https://www.thestartupfounder.com/try-the-smartest-image-moderator-api-available-on-the-web/