In the digital age, extracting information from websites is a common practice for various purposes, such as data analysis, market research, and content aggregation.

However, it is crucial to perform these tasks without raising suspicions or being detected as a scraper by website owners. This is where the User Agents API proves to be highly valuable.

When extracting information from websites, being identified as a scraper can lead to consequences such as IP blocking, CAPTCHA challenges, or even legal action. To avoid these complications, it is necessary to employ techniques that mimic human behavior, which is why a User Agents API is useful.

Extract Information From Websites With User Agents API

The User Agents API allows developers to simulate real user behavior by setting the User-Agent header in HTTP requests. By leveraging this API, developers can send requests that appear to come from legitimate users, making the scraping process less noticeable.
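As a minimal sketch of what specifying a user agent header looks like in practice, the snippet below attaches a User-Agent string to a request using Python's standard library. The user agent string and the target URL are illustrative assumptions, not output from the User Agents API, and the helper name `build_request` is hypothetical.

```python
import urllib.request

# Illustrative user agent string (an assumption for this sketch);
# in practice the value would come from the User Agents API.
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/120.0.0.0 Safari/537.36"
)


def build_request(url: str, user_agent: str) -> urllib.request.Request:
    """Return a GET request that carries the given User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


request = build_request("https://example.com/", USER_AGENT)

# urllib stores header names in capitalized form, hence "User-agent".
print(request.get_header("User-agent"))
```

Passing `request` to `urllib.request.urlopen` would send it; the sketch stops before that step so it can be run without network access.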

By rotating user agents and mimicking human browsing patterns, the API helps maintain a low scraping footprint, reducing the risk of detection. This API provides various user agent options, allowing developers to customize requests and emulate different browsers, devices, and operating systems. This flexibility adds authenticity to the scraping process, increasing the chances of successful extraction while avoiding detection.
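Rotation can be sketched in a few lines. The example below cycles through a small pool of user agents so that consecutive requests carry different headers. The pool contents, the URLs, and the function name `rotating_requests` are assumptions made for illustration; a real pool would be filled with values returned by the API.

```python
import itertools
import urllib.request

# Illustrative pool (an assumption); a real pool would come from the API.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def rotating_requests(urls, user_agents):
    """Yield one request per URL, cycling through the user agents in order."""
    agents = itertools.cycle(user_agents)
    for url in urls:
        yield urllib.request.Request(url, headers={"User-Agent": next(agents)})


urls = [f"https://example.com/page/{n}" for n in range(1, 5)]
requests_to_send = list(rotating_requests(urls, USER_AGENTS))

for req in requests_to_send:
    print(req.full_url, "->", req.get_header("User-agent"))
```

Round-robin rotation is only one possible policy; choosing a user agent at random per request would work equally well with the same structure.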

Additionally, the User Agents API helps overcome website restrictions and anti-scraping measures. Websites often implement mechanisms like rate limiting or user agent blocking to deter scrapers. By using the User Agents API, developers can adapt their scraping requests to get past user agent blocking and extract information smoothly; rate limits, which are often keyed on the IP address, still call for pacing requests.

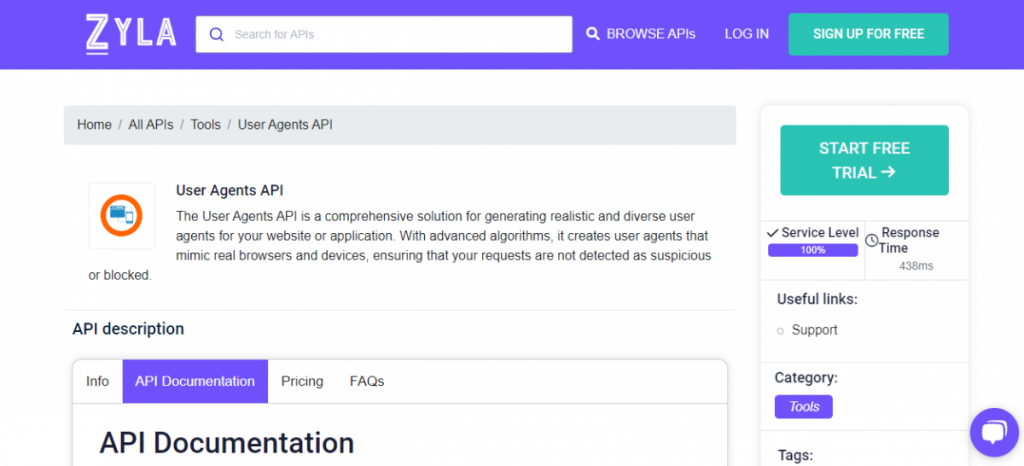

The User Agents API empowers you to generate authentic and diverse user agents for your website or application.

By utilizing advanced algorithms, the API creates user agents that closely resemble real browsers and devices. This ensures a high level of realism and credibility for your requests, minimizing the chances of being blocked or flagged as suspicious by website administrators or firewalls. Consequently, your users can effortlessly access the content they require.

Its design emphasizes flexibility and user-friendliness, enabling you to easily customize your user agent according to specific needs and requirements.

How To Use This API?

1- Go to User Agents API and simply click on the button “Start Free Trial” to start using the API.

2- After signing up on Zyla API Hub, you’ll be given your personal API key.

3- Employ the endpoint

4- Complete the CAPTCHA to confirm that you are not a robot, then make the API call by pressing the “test endpoint” button and see the results on your screen.
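Outside the web interface, the same call could be made from code. The sketch below is a rough illustration only: the endpoint URL is a hypothetical placeholder, the `device` query parameter is invented for the example, and sending the key as a Bearer token in the `Authorization` header is an assumption. The real endpoint, parameters, and authentication scheme should be taken from the API's documentation page.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical placeholder; replace with the endpoint URL from the API's page.
API_URL = "https://example.com/user-agents/generate"


def build_api_request(api_key: str, params: dict) -> urllib.request.Request:
    """Build an authenticated GET request for the (hypothetical) endpoint."""
    query = urllib.parse.urlencode(params)
    url = f"{API_URL}?{query}" if query else API_URL
    # Assumption: the key is sent as a Bearer token.
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {api_key}"}
    )


def fetch_user_agent(api_key: str, params: dict) -> dict:
    """Send the request and decode the JSON response body."""
    request = build_api_request(api_key, params)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


# Building the request does not touch the network; fetch_user_agent does.
request = build_api_request("YOUR_API_KEY", {"device": "desktop"})
print(request.full_url)
```

Calling `fetch_user_agent` with a real key and endpoint would return the decoded response, whose exact fields depend on the API.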

Here’s an example of how the API works:

Most Common Use Cases Of This API

This API is a valuable tool in various applications:

- Web Scraping: You can generate realistic and diverse user agents using this API for web scraping. This helps you extract information from websites without being detected as a scraper.

- Load Testing: During load testing, the API allows you to simulate requests from different devices and browsers. This helps evaluate the performance of your website or application under various conditions.

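- Anonymous Browsing: Protect your users’ privacy by using the API to generate generic user agents. These agents mask the real browser, device, and operating system; they do not hide the IP address, so concealing location requires additional measures such as a proxy.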

- Content Delivery: With the API, you can generate user agents that mimic specific browsers and devices. This enables targeted content delivery to your intended audience.

- Analytics and Metrics: The API also aids in tracking user behavior and gathering data about your website or application. The generated user agents provide valuable information on user demographics, locations, and device usage. This data helps improve your website’s performance and informs future development decisions.