Do you need to crawl a website that has too many blockers? Keep reading, and you’ll find out how to handle all of them with a scraping API!

People generally block ads to get rid of intrusive advertising. Some companies specialize in creating pop-ups that sell all kinds of products online. Often, when you open your email account or search for something in your browser, a flood of ads appears. Sometimes there are so many that you can’t do what you came to do on that page. And occasionally they show inappropriate content that you never asked for.

Of course, some companies work to stop those pop-ups from appearing on websites, so you can browse in peace. Their services can be integrated into a network, an app, or a browser so that you no longer see the ads. Unfortunately, that measure hurts online marketing businesses.

However, if you want to extract data or images from a specific site, you may run into similar blockers that exist to protect privacy. You most likely have some of them installed as browser extensions yourself. Everyone wants to guard against intrusion, even when the visitor has no bad intentions. But if your job requires information that you can’t obtain from another source, a crawling tool is a good option. Below, you’ll learn why.

How are scraping APIs and blockers related?

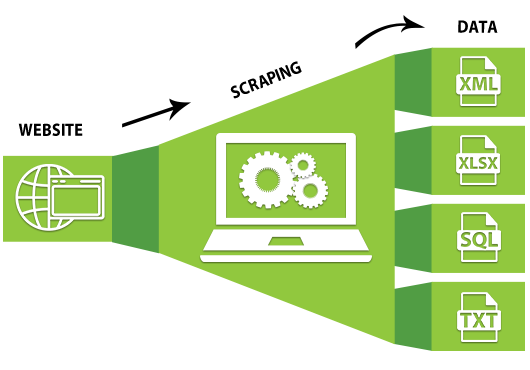

API is short for Application Programming Interface. It’s software that sits in the middle between two programs and lets them communicate. In data extraction, the API stands halfway between your request for data and the sources that provide it.

APIs have many uses, and different sectors of the economy rely on them. Some can retrieve the data behind any website you choose and collect only the exact items you asked for. They also deliver the results in paginated files, which makes them easier to read.
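As a rough illustration of those two ideas (keeping only the requested items and returning them in pages), here is a minimal Python sketch. The record layout, the field names, and the helpers `select_fields` and `paginate` are hypothetical; they are not taken from any particular scraping API.

```python
from typing import Any, Dict, Iterator, List


def select_fields(records: List[Dict[str, Any]], fields: List[str]) -> List[Dict[str, Any]]:
    """Keep only the requested fields from each scraped record."""
    return [{f: r[f] for f in fields if f in r} for r in records]


def paginate(items: List[Any], page_size: int) -> Iterator[Dict[str, Any]]:
    """Yield the items in pages of at most `page_size` entries."""
    if page_size < 1:
        raise ValueError("page_size must be at least 1")
    total_pages = (len(items) + page_size - 1) // page_size
    for page in range(total_pages):
        start = page * page_size
        yield {
            "page": page + 1,
            "total_pages": total_pages,
            "items": items[start:start + page_size],
        }


# Hypothetical scraped records; a real response would come from the API.
scraped = [
    {"title": "Item A", "price": 10, "html": "<div>...</div>"},
    {"title": "Item B", "price": 12, "html": "<div>...</div>"},
    {"title": "Item C", "price": 15, "html": "<div>...</div>"},
]

pages = list(paginate(select_fields(scraped, ["title", "price"]), page_size=2))
for page in pages:
    print(page["page"], "of", page["total_pages"], page["items"])
```

Here the raw `html` field is dropped because it was not requested, and the three remaining records are split into two pages.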

During that data extraction process, scraping APIs use proxies to access pages without getting banned. Those proxies kick in right away to make it easier to reach a site.
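To make the idea of proxies concrete, here is a minimal Python sketch of proxy rotation: if one proxy gets refused, the request is retried through the next one. This is only an assumption about how such a service might work behind the scenes, not Codery’s actual implementation. The `Blocked` exception, the `fetch_with_rotation` helper, the proxy addresses, and the stand-in `fake_fetch` function are all hypothetical.

```python
from typing import Callable, Iterable, Optional


class Blocked(Exception):
    """Raised by a fetcher when the target site refuses the request."""


def fetch_with_rotation(
    url: str,
    proxies: Iterable[str],
    fetch: Callable[[str, str], str],
) -> str:
    """Try each proxy in turn and return the first successful response body."""
    last_error: Optional[Exception] = None
    for proxy in proxies:
        try:
            return fetch(url, proxy)
        except Blocked as err:
            last_error = err  # this proxy was refused, move on to the next
    raise Blocked(f"all proxies were refused for {url}") from last_error


# Stand-in fetcher for demonstration: pretends the first proxy is banned.
def fake_fetch(url: str, proxy: str) -> str:
    if proxy == "proxy-1.example:8080":
        raise Blocked(f"{proxy} is banned")
    return f"<html>fetched {url} via {proxy}</html>"


html = fetch_with_rotation(
    "https://example.com/products",
    ["proxy-1.example:8080", "proxy-2.example:8080"],
    fake_fetch,
)
print(html)
```

Passing the fetcher in as a function keeps the sketch runnable without a network connection; in practice it would be replaced by a real HTTP call routed through the given proxy.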

Learn to handle blockers with a scraping API like Codery

Honestly, you won’t have to learn how to handle blockers, because Codery does that job for you as part of its system. You can also request JavaScript rendering or any other element you want from a website. In addition, it offers search engine monitoring, so you can track your page’s progress daily.

What you do have to learn is how to use Codery’s API. The steps below show you how.

Steps to start using Codery’s API

1- Visit its website: https://www.mycodery.com/.

2- Browse the site to get an overview of all the features available.

3- Look at the top-right corner of the page and click the ‘Register’ button.

4- Create an account for free.

5- Go to the ‘Documentation’ section to learn how to validate your API key.
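Once you have a key, a request to a scraping API usually boils down to a URL that carries the key and the page you want. The Python sketch below shows that general shape only. The endpoint `https://api.example.com/scrape` and the parameter names `api_key`, `url`, and `render_js` are placeholders invented for illustration; check Codery’s documentation for the real ones.

```python
from urllib.parse import urlencode

# Hypothetical endpoint and parameter names, used only for illustration.
API_ENDPOINT = "https://api.example.com/scrape"


def build_request_url(api_key: str, target_url: str, render_js: bool = False) -> str:
    """Build a request URL carrying the API key and the page to scrape."""
    if not api_key:
        raise ValueError("an API key is required")
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        # Ask the service to render JavaScript before returning the page.
        params["render_js"] = "true"
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_request_url(
    "YOUR_API_KEY",
    "https://example.com/products",
    render_js=True,
)
print(request_url)
```

The resulting URL could then be sent with any HTTP client, for example `urllib.request.urlopen` from Python’s standard library.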

You might want to read this article:

https://www.thestartupfounder.com/how-to-be-thriving-with-scrapingbee-alternatives/.